Organize the parameters of 3D Gaussian Splatting (3DGS) scenes into a 2D grid and enforce local smoothness during training. Then leverage off-the-shelf image compression to store the attribute images at a high compression rate. This beats most concurrent compression methods in size, and all other compared methods in quality. The file format and decoding are dead simple.

The goal of compressing 3DGS scenes is to provide a compact representation that can be rendered into the training and test views without losing visual detail. We are not bound to a specific configuration of Gaussian splats: we can mold the splats during training so that they compress well. We use this freedom to make neighboring splats share attributes, e.g. neighbors in xyz sharing the same rotation values.

A 30-minute talk about Self-Organizing Gaussians given at the ECCV 2024 Redux Series at Voxel51.

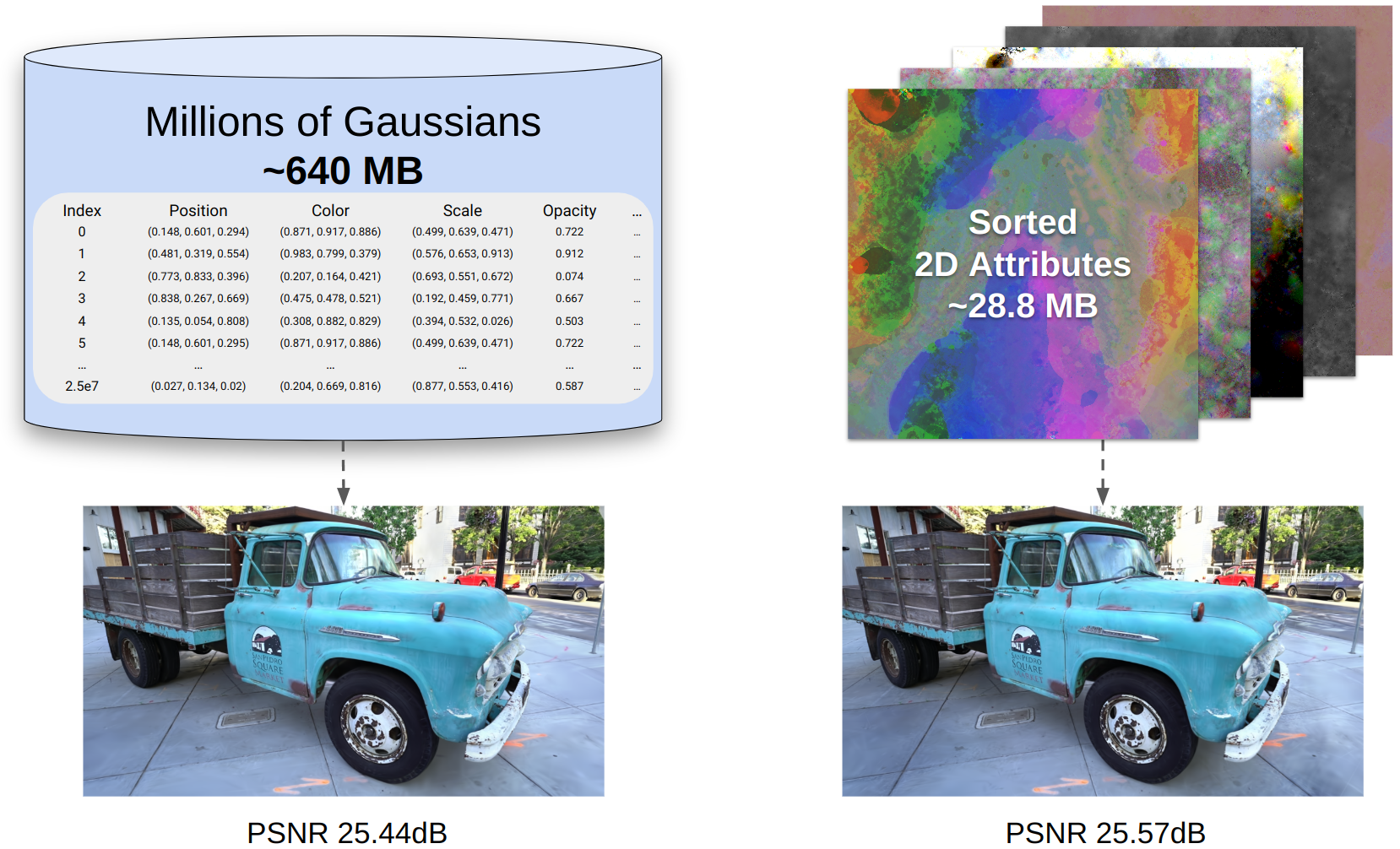

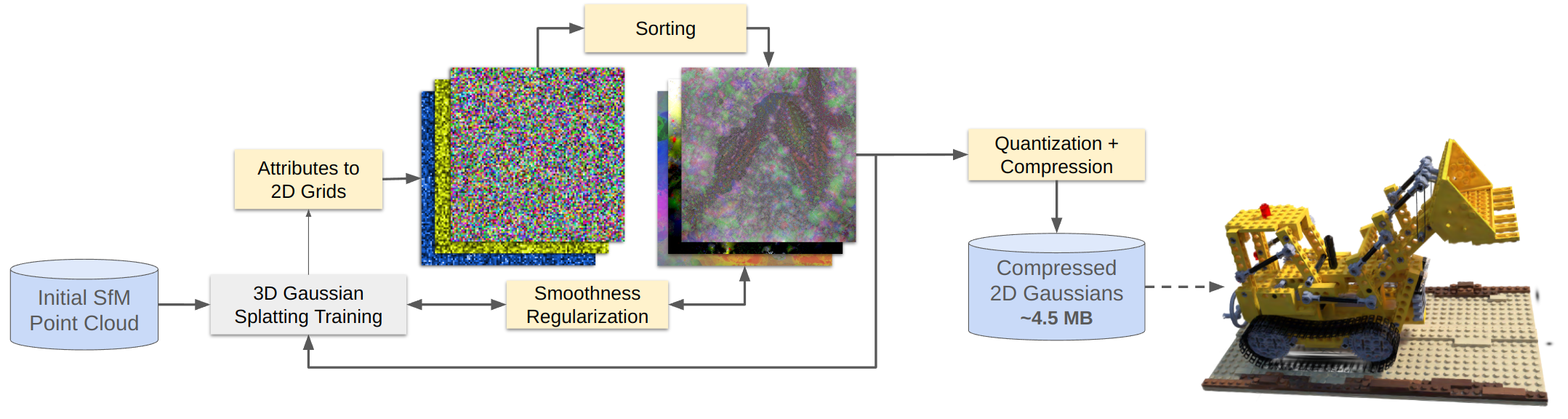

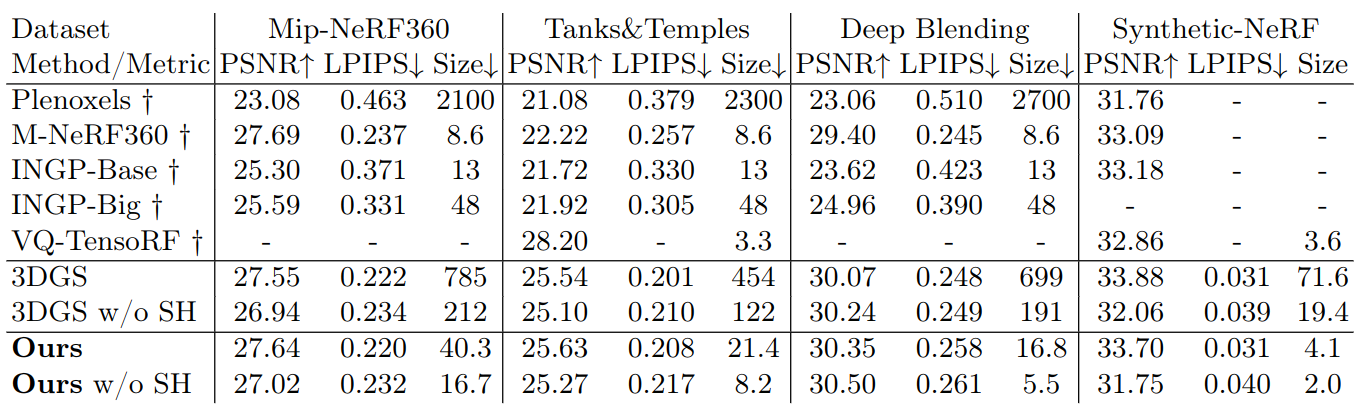

3D Gaussian Splatting has recently emerged as a highly promising technique for modeling static 3D scenes. In contrast to Neural Radiance Fields, it utilizes efficient rasterization, allowing for very fast rendering at high quality. However, the storage size is significantly higher, which hinders practical deployment, e.g. on resource-constrained devices. In this paper, we introduce a compact scene representation organizing the parameters of 3D Gaussian Splatting (3DGS) into a 2D grid with local homogeneity, ensuring a drastic reduction in storage requirements without compromising visual quality during rendering. Central to our idea is the explicit exploitation of perceptual redundancies present in natural scenes. In essence, the inherent nature of a scene allows for numerous permutations of Gaussian parameters to equivalently represent it. To this end, we propose a novel highly parallel algorithm that regularly arranges the high-dimensional Gaussian parameters into a 2D grid while preserving their neighborhood structure. During training, we further enforce local smoothness between the sorted parameters in the grid. The uncompressed Gaussians use the same structure as 3DGS, ensuring a seamless integration with established renderers. Our method achieves a reduction factor of 19.9x to 39.5x in size for complex scenes with no increase in training time, marking a substantial leap forward in the domain of 3D scene distribution and consumption.

An overview of our novel 3DGS training method. During training, we arrange all high-dimensional attributes into multiple 2D grids. Those grids are sorted and a smoothness regularization is applied. This creates redundancy, which helps to compress the 2D grids into small files using off-the-shelf compression methods.
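To make the idea of a sorted, locally smooth grid concrete, here is a minimal NumPy sketch. It is not the sorting algorithm or the regularizer from the paper: `arrange_in_grid` is a hypothetical stand-in that simply sorts by the first attribute to form rows and by the second attribute within each row, and `smoothness_loss` is an assumed, simplified measure (mean squared difference between adjacent cells). The attribute values are random placeholders, not real splat data.

```python
import numpy as np

def arrange_in_grid(attrs, side):
    """Place side*side attribute vectors on a (side, side, C) grid.

    Stand-in heuristic (an assumption, not the paper's algorithm):
    sort by the first attribute to form rows, then sort each row
    by the second attribute.
    """
    assert attrs.shape[0] == side * side
    by_first = attrs[np.argsort(attrs[:, 0], kind="stable")]
    rows = by_first.reshape(side, side, -1)
    order = np.argsort(rows[:, :, 1], axis=1, kind="stable")
    # Reorder every row; the index array broadcasts over the channel axis.
    return np.take_along_axis(rows, order[:, :, None], axis=1)

def smoothness_loss(grid):
    """Mean squared difference between horizontally and vertically adjacent cells."""
    dx = grid[:, 1:] - grid[:, :-1]
    dy = grid[1:, :] - grid[:-1, :]
    return float((dx ** 2).mean() + (dy ** 2).mean())

rng = np.random.default_rng(0)
side = 16
# Hypothetical 2-channel attributes, one vector per splat.
attrs = rng.uniform(0.0, 1.0, size=(side * side, 2))

random_grid = attrs.reshape(side, side, 2)   # arbitrary order
sorted_grid = arrange_in_grid(attrs, side)   # neighborhood-aware order

print("loss, arbitrary order:", smoothness_loss(random_grid))
print("loss, sorted order:   ", smoothness_loss(sorted_grid))
```

Both grids hold exactly the same vectors; only their placement differs. The sorted arrangement yields a much lower neighbor difference, which is the kind of redundancy an image codec can exploit.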

An hour-long conversation with Michael Rubloff and MrNeRF in the View Dependent Podcast about 3DGS compression, why the Gaussians are Self-Organizing, and how this project came to be.

Multiple concurrent methods have been developed for compressing 3D Gaussian splats. Commonly, these methods aim to reduce the number of Gaussians needed for the scene and apply quantized vector compression. Unlike other approaches that utilize codebooks or hash grids for vector coding, our method is unique in organizing the Gaussians into locally smooth 2D grids during training.

This grid organization during training enables the use of standard image compression techniques to code the attributes. This simplifies decoding: one simply decompresses the grid attribute images and applies a rescaling. Each pixel from the coded images then corresponds to a set of Gaussian splatting attributes, which can be rendered with any standard 3DGS render engine.
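The decoding path described above (decompress, then rescale) can be sketched as follows. This is an illustration under stated assumptions, not the project's file format: `zlib` stands in for the image codec, the 8-bit quantization with per-channel minimum and range is an assumed scheme, and `encode_grid`, `decode_grid`, and the example grid are hypothetical.

```python
import zlib
import numpy as np

def encode_grid(grid):
    """Quantize a float grid (H, W, C) to 8 bits per channel and compress it.

    zlib is a stand-in for an image codec (an assumption for this sketch).
    """
    lo = grid.min(axis=(0, 1))
    hi = grid.max(axis=(0, 1))
    scale = np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero
    q = np.round((grid - lo) / scale * 255.0).astype(np.uint8)
    payload = zlib.compress(q.tobytes(), 9)
    return payload, lo, scale, q.shape

def decode_grid(payload, lo, scale, shape):
    """Decompress the bytes and rescale each channel back to its original range."""
    q = np.frombuffer(zlib.decompress(payload), dtype=np.uint8).reshape(shape)
    return q.astype(np.float64) / 255.0 * scale + lo

# Hypothetical smooth 3-channel attribute grid of 64 x 64 cells.
ys, xs = np.meshgrid(np.linspace(0, 1, 64), np.linspace(0, 1, 64), indexing="ij")
grid = np.stack([xs + ys, np.sin(3 * xs) * ys, xs * xs - 2.0], axis=-1)

payload, lo, scale, shape = encode_grid(grid)
decoded = decode_grid(payload, lo, scale, shape)

# Each grid cell ("pixel") becomes one row of per-splat attributes.
splats = decoded.reshape(-1, decoded.shape[-1])
print(len(payload), "bytes for", splats.shape[0], "splats")
```

The per-channel `lo` and `scale` values are the only side information the decoder needs in this sketch; the reconstruction error per channel is bounded by half a quantization step.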

As of July 2024, our method (Morgenstern et al.) achieves the highest quality for the compared datasets, second only in size to the HAC method, also presented at ECCV 2024.

We invite you to explore other methods for compressing Gaussian splats and compare their approaches in this survey:

If you use our method in your research, please cite our paper. The paper was presented at ECCV 2024 and published in the official proceedings in 2025. You can use the following BibTeX entry:

    @InProceedings{morgenstern2024compact,
      author    = {Wieland Morgenstern and Florian Barthel and Anna Hilsmann and Peter Eisert},
      title     = {Compact 3D Scene Representation via Self-Organizing Gaussian Grids},
      booktitle = {Computer Vision -- {ECCV} 2024},
      year      = {2025},
      publisher = {Springer Nature Switzerland},
      address   = {Cham},
      pages     = {18--34},
      doi       = {10.1007/978-3-031-73013-9_2},
      url       = {https://fraunhoferhhi.github.io/Self-Organizing-Gaussians/},
    }